Zig as the foundation for a next generation game engine

Table of contents

1. Introduction

Until recent years, before Indie dev boom, most of the engines were proprietary and were tightly coupled to games that were built on top of them. Developers wishing to create a game had to do a thorough research about a target platform and write a lot of basic functionality that is now shipped in most engines by default. Appearance of Steam and Xbox Live Arcade in mid two thousands was one of the stepping stones for indie game development and it “pulled underground indie devs up into the sun” by taking care of the game distribution.1 Naturally, this caused the rise in demand for game engines. However, most of the engines at the time were proprietary, and companies behind them weren’t keen on sharing their secrets, as the industry was still in its adolescence. As a result, they were never properly researched.2

Almost all relevant game engines are closed source which means that details of how huge game projects are managed are not available to the researchers. There are some books on this topic, but they don’t provide high level architecture overview, only low level implementation. Most of the recent academic works agree that not a lot is known about the game development process, and most of the relevant information about big and successful engines come from postmortems, blogs or other media where individual developers share their experiences. The fact that they are not thoroughly researched nor defined yet and is a source of much frustration.3

Additionally, the rebirth of handhelds with Nintendo Switch, Steam Deck and other platforms means that game developers need to take more care about game optimizations since the computing power of those devices is more limiting compared to bulky PCs and consoles. Although the semiconductor industry has been good at identifying near and long term bottlenecks of Moor’s Law4, some chip-makers claim that Moor’s Law is dead, justifying the price hike of graphics cards.5 Thus, it is important to find new ways for optimizing video games within the software, instead of relying on the hardware to handle the ever-increasing load. Popular engines like Unreal and Unity allow for a faster development cycle by limiting the access to the engine’s underlying systems.

“It is our belief that the manner in which low-level issues influence architectural design is intrinsically linked to the fact that technology changes at a very fast rate”.6 For these reasons, it is important to periodically evaluate those systems and to look for possible improvements. One of them could be switching to another language. Most of the modern games are written in C++ and C3, but both of them are quite old, and were designed during a different age and for different kind of hardware that is not common nowadays - dedicated graphic cards did not exist and 4 kilobytes of RAM was a norm.

In a present day, game development cycle is very complex, especially in triple-A studios. Generating the assets and writing the code is just a tine fraction off it. Second most common kind of problem faced in the field is related to technical issues.7 Creation of a game starts from pre-production stage, with writing a game design document and scribbling the concept art. It is during this period when a project should decide what kind of engine will be used judging from the core gameplay features. A right decision at the early stage might reduce the amount of technical problems faced later by removing the excessive functionality that is common in general purpose engines like Unity, thus simplifying its usage.

In order to find possible improvements in current game development process, the following research questions will need to be answered: What are the fundamental components of a game engine? and How does a programming language impact the game development process? To answer them the following research tasks need to be performed:

- compare the features of popular game engines;

- analyze the architecture of open-source game engines and identify common components;

- compare C, C++, Rust and Zig in several features such as semantics, package management, build system, etc.;

The paper is structured as follows:

- Section 1 is a theoretical part that exposes previous researches and explains the terminology;

- Section 2.1 compares Unity and Unreal Engine;

- Section 2.2 analyzes existing open source game engines and their trends;

- Section 2.3 analyzes C, C++, Rust and Zig programming languages;

- Section 3 discusses our results and threats to their validity;

- Section 4 concludes.

1.1. Theoretical part

As mentioned earlier, academic papers that touch the topic of game engines concur that it is severely underresearched.7,8,9,2 “This lack of literature and research regarding game engine architectures is perplexing”.6 By the time of writing of this paper, several works about game engines internal architecture have been published, however, to the best of my knowledge, none has discussed the importance of a programming language. Here are the most relevant studies for our exploration.

Anderson et al. do not perform a research, but introduce fundamental questions that need to be considered when designing the architecture of a game engine.6 Although dated, this is a great introductory resource that distills the state of research of game engines. They highlight the lack of proper terminology and game development “language”, as well as the lack of clear boundaries between a game and its engine. Authors encourage classifying common engine components, investigating the connection between low-level issues and top-level engine implementation, and identifying the best practices in a field. A paper also mentions an “Uberengine”, a theoretical engine that allows to create any kind of game regardless of genre. We will come back to this study in Section 2.2.

A comprehensive book by Gregory 10 about the engine architecture is referenced in multiple studies. He proposes a “Runtime Engine Architecture”, in which a game engine consists of 15 runtime components with a clear dependency hierarchy. We take a close look at them and debate possible modifications in Section 2.2.1. This book finds an optimal middle ground between a high-level, broad description of how those modules interact with each other, and their detailed implementations. Most of the example code is written in C++, hence object-oriented in nature.

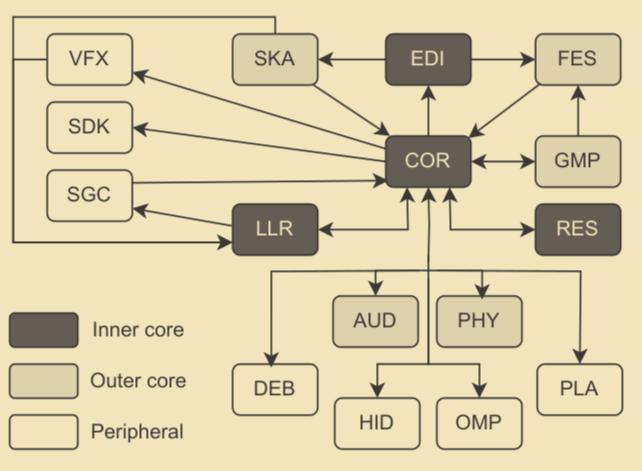

Ullmann et al.11 used this model as a target reference when exploring the architecture of three popular open source game engines: Cocos2d-x, Godot, and Urho3D. They added a separate World Editor (EDI) subsystem “because it [World Editor] directly impacts game developers’ work.” Godot was found to have all 16 out of 16 subsystems, while Cocos2d-x and Urcho3D 13 and 12 respectively. However, the missing functionality was not absent, but rather scattered among other subsystems. They also found which subsystems are more often coupled with one another: COR, SGC and LLR. COR file are helper tools that are used throughout the system. “Video games are highly dependent on visuals, and graphics are a cross-cutting concern, therefore it is no surprise that so many subsystems depend on LLR and SGC”.11 Other commonly coupled systems were VFX, FES, RES and PLA.

In their next paper12, they sampled 10 engines and found that the top-five subsystems were: Core (COR), Low-Level Renderer (LLR), Resources (RES), World Editor (EDI) and Front End (FES) tied with Platform Independence Layer (PLA). Those subsystems “act as a foundation for game engines because most of the other subsystems depend on them to implement their functionalities”. A visualized dependency graph is shown below. There are, however, other architectures used in proprietary mainstream engines, of which very little is know, and the suggested architecture is only one of the possibilities.

A breakdown of game development problems was performed by Politowski et al.7, where they determined that the second most common kind of problem is “technical”, sitting at 12%. First being “Design” problems - 13%, and third - “Team” with 10%. Addressing the technical issues with game engines tackles the second most common kind of problem faced in a game-development industry. This is a hint for us which parts of the next generation game engine need to be addressed.

A paper from Nederlands, written by Gunkel at al.13 describes the technical requirements of XR architecture.

(XR) Extended Reality - collective name for Virtual Reality, Augmented Reality and Mixed Reality.

One of the drawbacks of contemporary game engines is that they are designed to work with static meshes and not the environment around a user, which needs to be acquired in real-time. This problem is being solved by Spatial Computing - “widely autonomous operations to understand the environment and activities of the users and thus building the main enabler for any AR technology”. There is also a need for a metadata format that describes how and where to place an entity in a scene. The most appropriate one is glTF. “GLTF is a royalty-free format that can both describe the composition of 2D and 3D media data, as well as directly compress assets in its binary format… It is however yet to be seen if it can be established as a main entity placement descriptive format for XR”. Last point is about Remote Rendering. To render a scene with proper lighting, shadows and, perhaps, ray-tracing, a substantial processing power is needed. They conclude that game engines and multimedia orchestration “require a closer synergy, optimizing processes at various levels”.

Mark O. Riedl14 describes some of the common problems with AI in game engines. He breaks down AI problems into 2 categories: pathfinding and decision-making; and introduces 2 strategies for decision-making: finite-state machines and behavior trees. AI module takes the responsibility of datamining - analyzing how players interact with a game. This data can be used to guide the development of patches, new content, or make business decisions.

Two papers explore game-development process as a whole. Aleem et al.2 describe in thorough details common problems faced in pre-production, production and post-production phases. Hussain et al.8 define common problems during a project life-cycle, and, similarly to Politowski et al., find that the biggest contributor to the problems is management: “…scope problems, for instance, feature creep - initial scope of the project is inadequately defined, and consequent development entails addition of new functionalities which becomes an issue for requirements management”. An important practice that helps aleviating this problem is using a Game Design Document. Additionally, teams that tried incorporating Model-Based development report some success:

model-driven development here is to abstract away the finer details of the game. This is done with high-level models such as structure and behavior diagrams as well as control diagrams. … it allows management to gain at least a rudimentary understanding of the game design and help alleviate the communication problems encountered in previous development methodologies.8

Toftedahl and Engström15 in their work discovered that as of 2018 “Unity is the engine used in approximately 47 % of the games published on Itch.io”. While on Steam it comes as second (13.2%) after Unreal Engine (25.6%). They categorize game engines into 4 types:

- Core Game Engine: collection of product facing tools used to compile games to be executed on target platforms. e.g. id Tech 3, Unity core;

- Game Engine: a piece of software that contains a core engine and an arbitrary number of user facing tools e.g Raylib, Bevy;

- General Purpose Game Engine: a game engine targeted at a broad range of game genres e.g. Unity, Unreal, Godot;

- Special Purpose Game Engine: a game engine designed to create games of a specific genre e.g. GameMaker, Twine.

Because they did not quantify the exact amount of user facing tools required for a Game Engine, this research can be hardly called satisfactory and only causes ambiguity. In theory, any single utility added to a Core Game Engine makes it a Game Engine. Additionally, their definition of Core Game Engine is in contradiction with Gregory’s “Runtime Engine Architecture” where Core files are “useful software utilities” (chpt. 1.6.6)10. Unity core contains several packages including a renderer and UI16, meanwhile Gregory has a “Low-level Renderer” and “Front End” separate from “Core”. As for the Special Purpose Game Engine, it can be considered as an engine that compiles only one kind of game but with different configurations. In which case, a mod for Minecraft can be considered a game that was produced using Minecraft engine.

1.2. Game vs Non-game software

In their work “Are Game Engines Software Frameworks? A Three-perspective Study”3, Politowski et al. have explored whether game engines share similar characteristics with software frameworks by comparing 282 most popular open source engines and 282 most popular frameworks on GitHub.

Game engines are slightly larger in terms of size and complexity and less popular and engaging than traditional frameworks. The programming languages of game engines differ greatly from that of traditional frameworks. Game engine projects have shorter histories with less releases. Developers perceive game engines as different from traditional frameworks and claim that engines need special treatments. 3

Game developers often add scripting capabilities to their engines to ease the design and testing workflow, meanwhile frameworks products are often written in the same programming languages.

A similar comparison between game and non-game software has been performed by Paul Pedriana from Electronic Arts17. Most notable differences are that game source and binaries tend to be rather large, they operate on much larger application data and do not tolerate any execution pauses. Moreover, by targeting non-desktop platforms such as consoles and handhelds, which often do not have paged memory and incorporate special kinds such as physical, non-local, non-cacheable and other memories, developers must practice great care when dealing with memory management As a result, game development requires a lot more effort put into the optimization of memory usage - every byte of allocated memory must be accounted for.

Other notable difference is almost complete lack of automated testing in game development.18 Correctness of the gameplay code is not particularly important, as usually there are no concrete definitions other than “it should feel right”. Unlike in other domains, user’s safety or savings are not at stake if a program contains a bug. It is very often vice versa - a peculiar bug might lead to discovering a new and compelling game feature that will pivot a game’s further development. Parts that might adopt partial test coverage will be restricted to low-level game engine code like physics routines, network matchmaking, algorithm correctness and similar.

Nonetheless, despite the differences in those two fields, Pesce points out that game developers should not shy away to learn from other fields, even from “wasteful” Python. In particular, web services tackle modularity and hot-reloading much more efficiently, while very few engines are designed to have well-separated concerns. Theory of API development, debating them and sketching the connections of subsystems on a whiteboard are common and useful practices that game developers tend to neglect.19

1.3. Terminology

So what is a game engine?

John Carmack, and to a less degree John Romero, are credited for the creation and adoption of the term game engine. In the early 90s, they created the first game engine to separate the concerns between the game code and its assets and to work collaboratively on the game as a team.3

A game became known as Doom, and it gave birth to the first-ever “modding” community (chpt 1.3)10. Thus, a new game could be made by simply changing game files and adding new art, environment, characters etc. inside a Doom’s engine. It is hard to draw a line and try to fit this engine into a taxonomy proposed by Toftedahl and Engström as it is both a Special Purpose engine, that creates a game in a specific genre; and a Core engine, that provides useful software utilities.

Another definition for a game engine that popular engines’ websites give is as follows: “A framework for game development where a plethora of functions to support game development is gathered”.15 Jason Schreier claims that a word “engine” is a misnomer:

An engine isn’t a single program or piece of technology — it’s a collection of software and tools that are changing constantly. To say that Starfield and Fallout 76 are using the “same engine” because they might share an editor and other common traits is like saying Indian and Chinese meals are identical because they both feature chicken and rice.20

Lastly, Politowski et al. gathered multiple definitions of “game engine”:

- collection of modules of simulation code that do not directly specify the game’s behavior (game logic) or game’s environment (level data);

- “different tools, utilities, and interfaces that hide the low-level details of the implementations of games. Engines are extensible software that can be used as the foundations for many different games without major changes… They relieve developers so that they can focus on other aspects of game development”.3

In brief, all those definitions imply that an engine is foremost a collection of software tools. Applications and a code that can be reused, and allows game developers to start working on game-related problem instead of writing general utilities from scratch. It is my belief that a “game engine” is an imaginary concept that is used as an umbrella term for software tools that help with creating video games, similarly as a word “dinner” is an umbrella term for a collection of meals. Therefore, for the purpose of this paper, “game engine” will imply an ecosystem around software development, which helps with producing video games in one way or another. Development environment potentially has a significant influence on the quality of a final product, and so cannot be ignored when designing a modern engine.

Video game modding (short for “modification”) is the process of alteration by players or fans of one or more aspects of a video game, such as how it looks or behaves.21 Mods may range from small changes and tweaks to complete overhauls, and can extend the replay value and interest of the game. We cover this topic in more details in Section 2.2.7.

During the analysis of programming languages, terms “low-level” and “high-level” will be used frequently. There is no clear answer what makes a particular language high- or low-level, but it is good comparison tool. A language like C can be perceived as low-level compared to Python, because a developer is responsible for memory management; but on the other hand C might be treated as high-level compared to x86 assembly, because a developer does not work with registers directly. Again, for the purpose of this paper, lower level will suggest that a language gives software engineers more control over the hardware. In cases where those terms do not refer to the code but rather to the architecture, “high-level” means “less nitty-gritty details”.

Cargo cult programming is the practice of applying a design pattern or coding style blindly without understanding the reasons behind that design principle.

GPU is a Graphics Processing Unit. Unlike CPU, GPU can have some thousands of cores, but they are specialized to do floating point operations and do calculations in bulk. Usually, to talk to a GPU, one needs to call graphics API like OpenGL, DirectX, Vulkan etc. A renderer (or rendering engine) is a part of a game engine that is responsible for drawing pixels onto the screen. A shader is a program that is sent to the GPU to be executed, and it “runs on each vertex or pixel in isolation”.22

An important distinction we must establish is the language of engine vs language of scripting. An engine needs to run a game fast. But game development cycle also needs to be fast. For this and other purposes, an engine can integrate a scripting language. Such language is more limiting than the language an engine is written in, but it allows skipping a compilation step every time there is a new change to the game. Scripting language provides only a subset of features provided by an engine. A scripting language can be virtually anything, from a simple custom parser to full-fledged languages like C#, Lua or Python.

Now with the theory covered, we take a look at some of the popular game engines, and gather more data from concurrent blog posts and articles.

2. Research

As we have seen, Unreal and Unity are currently the most widely used game engines. The worldwide demand for Unreal Engine skills is expected to grow 138% over 10 years.23 Therefore, it is, crucial to understand what makes both of those engines appealing towards game developers.

We begin this research by answering what set of features provided by Unity and Unreal appeals to game developers and make those features our guidelines. After finding the most important ones, we take a look at open source game frameworks and analyze them based on those guidelines. The difference in analysis between Unity and Unreal and their open source counterparts will be in our point of view. First ones will be viewed from the point of view of a regular game developer, while the rest from both the game and the engine developer perspective. Then we compare languages used in all of those engines and attempt to determine whether any of the language peculiarities have had a direct impact on engine’s architecture.

Although this research is purely theoretical, we encourage readers to try to apply the findings to their own projects, because creating a practical product is an integral part of a learning process: “The only way for a developer to understand the way certain components work and communicate is to create his/her own computer game engine”.11 The second-biggest reason why new engines are being developed is because of the learning purposes, with first reason needing more control over the environment. The actual need to create a game is only on a third place.3 Although this trivial reason only comes at a third place, as game developers, we must battle test our engines by making games with them, with boring fundamental gameplay systems. Not ignoring artistic aspects of game development can help us while writing a game engine. If we get too far into development without ever making games with it, we might discover that our engine cannot make games. Many engines are supposed to be used by users, not to be a tech demo.24 There is a difference between testing new features in an empty scene and implementing them into something with actual gameplay.25

It’s easy to get so wrapped up in the code itself that you lose sight of the fact that you’re trying to ship a game. The siren song of extensibility sucks in countless developers who spend years working on an “engine” without ever figuring out what it’s an engine for.26

2.1. Unity and Unreal

There are many reasons why a certain product can gain popularity. For starters, both Unity and Unreal have entered the market quite early. Unity’s first release was in 2005, and it received a lot of attention by being completely free to use, while first version of Unreal was announced back in 1998. [31] 20 years is ample time for a product to attract users and determine the hierarchy of consumer needs. Nowadays, both engines are used for more than mere video game creation. Unreal is often being hailed as the future of filmmaking. Movie industry benefits from using it because working in real time makes things more dynamic. One can quickly visualize scenes, frame shots and experiment with the film’s “world” before committing to filming. “Unreal Engine is an incredible catalyst for world building”.27 Pre-visualisation is an area where efficiency is of utmost importance. Results need to be handled quickly and artists must respond instantly to last-minute changes. To achieve success in such environment, artists need tools that will deliver instant feedback. Unity assists artists with their dedicated AR tools, which come pre-configured with good default options and can be used straight out of the box - “This includes a purpose-built framework, Mars tools, and an XR Interaction Toolkit”.23

Both products have helped with lowering the skill floor for beginner game developers, and this technical barrier only continues getting lower. Not only new games are more graphically superior but also are much easier to make for less-technical artists. “The trend is moving towards more and more automation of the process, and towards improving the quality of the product at the final stage.”28 If this pattern proves to hold, it will mean that fewer games will be made from scratch, and more reusable assets will be recycled to make new games. For a smooth experience of asset reuse, a solid marketplace will be needed: “One of the biggest opportunities is how real-time engines facilitate exchanges via the marketplace”.28 Indeed, one prominent advantage that both engines seem to possess is an online marketplace. An artist can scroll a catalog of assets and make a game prototype in a short time span.

Despite the abundance of both free and paid 3D models available on the market, the world still needs more 3D designers. “In the world of 3D design, demand is so high that there is more work to do than there are designers to do it”.29 Perfect photorealism is becoming both more accessible and more common in design. In the future, whether filmmakers will go for a traditional camera shoot or CGI implementation will be a question of budget.29 Consequentially, with the rise in demand for 3D art there also rises a demand for a software which allows working with it.

2.1.1. Unity

In his overview of Unity, Gregory writes that the ease of development and cross-platform capabilities are its major strengths (chpt 1.5.9)10. Upon further investigation of the features of this engine, all the pros can be broken down into 3 rough categories: portability, versatility and community.

2.1.1.1. Pros

Most of the sources that construe Unity’s popularity highlight how cross-platform support greatly assists during both production and post-production phases.

…it stands out due to its efficiency as well as its multitude of settings for publishing digital games across multiple platforms… developers select and focus their efforts on developing code on a specific platform without spending hours configuring implementations to make the application run on other platforms.30

Unity game can be run on more than 20 different platforms, including all major desktops, Android, iOS, consoles like PlayStation 4 (including PS VR), PlayStation 5 (including PS VR2), Xbox One, Xbox Series S|X, Nintendo Switch and web browsers.31 Apart from being able to cross-compile a game, developers are also given a powerful suite of tools for analyzing and optimizing a game for each target platform (chpt 1.5.9)10.

This might be the paramount reason why Unity has withstood the test of time and managed to retain their user base. According to the data published by Unity Technologies, 71% of the mobile games are Unity-based.32 The ability to run a game on whichever device a player might possess significantly increases the chances of your game being picked up and played. Especially as cross-platform games gain more and more popularity with each year.33 “The possibility of fully integrating games into web browsers, at a time when mobile devices have dominated the market, is probably the future of many digital games”.30

Unity’s versatility has extended its reach beyond regular game development, finding application in the fields that require real-time simulation such as the automotive industry, architecture, healthcare, military and film production.34 A good example of a “do it all” software - a seemingly bottomless toolbox that contains the instruments useful for any professional in any industry. There is no kind of game you cannot create with it, be it 2D, 2.5D, 3D, XR etc.30 Pixel art or photorealism, card game or platformer, fps or RPG. It does not matter. Its user interface is simple enough to start prototyping without coding. Coding part can be omitted entirely with visual scripting provided by Bolt.32 “All you should know is just how to make game art. Everything else can easily be done with Unity’s tools”.33

Those tools include XR instruments (60% of AR and VR content is made with Unity34), Built-in Analytics (Real-time data and prebuilt dashboards). Game Server Hosting, Matchmaker and Cloud Content Delivery allow developer to create multiplayer games and host them on a web server. Unity Build Automation and Unity Version Control can take care of DevOps aspects of game development (47% of indies and 59% of midsize studios started to use DevOps to release quickly) Asset Manager (in beta as of writing this paper) helps with managing a project’s 3D assets and visualizing them in a web viewer.33 This is only a tip of an iceberg, and for a full list of available features you can always refer to the official documentation. Not only those features are there, Unity also releases frequent updates accompanied by major fixes to software security vulnerabilities. To top it all off, a scripting language C# provides even more versatility with the ability to support both the back-end (Azure SQL Server with .NET) and the front-end (Asp.Net) of the application.30 Unity’s adaptability to modern trends is simply fascinating and is overall a good textbook example on how to keep a game engine up to date.

Lastly, a reason that is not exclusive to the game development and can also be equally perceived as the consequence of the two previous factors is the community. More specifically marketplace and learning materials. Unity Asset Store offers a vast collection of assets, plugins, and tools that can be easily integrated into projects, saving time and enhancing development efficiency. If there is some kind of tool one might need, there is a good chance that somebody has already made it and now sells it for a cheap price on the asset store. Thus, when starting a new project, teams don’t need to start from scratch because many of the prerequisites can be simply acquired from there and be imported into a project in a matter of few clicks. “It’s better to spend a hundred dollars on the Unity Asset Store instead of doing something that would cost twenty or thirty thousand dollars and two to three months to develop”.33 By staying on the market for so long and being used in various projects of a varying caliber, it is no surprise that abundance of tutorials and code snippets can be found online. The amount of learning materials for Unity exceeds those of any other engines out there, even Unreal.35

2.1.1.2. Cons

Now let’s discuss Unity’s shortcomings. Biggest one stems from its desire to be versatile. A freshly created, empty 2D project can weight up to 1.25 GB, and PackageCache folder up to 1 GB. Although certain dependencies can be manually removed from “Packages/manifest.json”, most of the modules are deeply intertwined with Unity’s core, and removing them would be shortsighted and could lead to bugs. This bulkiness is fine for the modern hardware, but a resource-hungry behemoth is not ideal when a game needs to be run on an older hardware or power-efficient devices. Unity was found to have issues with CPU and GPU consumption and modules related to rendering.30 Not everyone who wants to play video games can afford a modern computer or a phone. Unity also doesn’t support linking external libraries.32 This factor cripples its possibility to be modular. Additionally, with constant technological innovations, computing power can be placed inside many unconventional targets like smart fridges, digital watches, thermostats etc. But “while Unity’s cross-platform support is a significant advantage, it can also lead to suboptimal performance on certain platforms. Games developed in Unity may not perform as well as those developed using native game engines specifically designed for a particular platform”.35 Being able to play a Snake game on a smart oven would be a neat little feature worthy of buying it.

Moreover, despite showing a great adaptability, Unity Technologies’s leadership sometimes make for-profit business decisions that are often not perceived well by the community, such as one of their latest announcements about a “Runtime Fee”, which charges developers each time a game using the engine is downloaded.36 This decision was harshly rebuked by community and was eventually changed to apply only to games created with Unity Pro and Unity Enterprise. Nonetheless, this company has shown themselves capable of trying to pull the rug out from under developers and not hesitating to squeeze them into debt with per/install (released game) royalties and other loathsome shenanigans. Problems like this are absent, as a rule, in open source game engines, some of which we discuss later.

Lastly, Unity might suffer from an identity crisis. It is supposed to be a “build anything” engine used by both indie and triple-A studios alike, however many indie studios do not need “anything”, they simply need the tools to make a game of a particular genre. Trying to branch out into too many spaces at once instead of focusing on what they are good at is a sign of a capitalistic greed, and it might bite them in the future. When the abundance of specialized software exists - software that is designed to excel at a small subset of features, why would a company that needs those specific features pick Unity?

2.1.2. Unreal Engine 5

Similar to Unity, Unreal Engine can be used for much broader purposes than to just create video games. It is more fitting to think about it as a real-time digital creation platform used for creating games, visualizations, generating VFX and more.37 In particular, filming industry has been eagerly adopting it in recent years, being successfully used in numerous popular movies and TV series like “Fallout”, “Love, Death + Robots”, “Mandalorian”, “House of the Dragon” and many more.38,27 Virtual Camera system allows controlling cameras inside the engine using an iPad Pro.37 Gregory claims that this engine has the best tools and richest engine feature sets in the industry, and that it can create virtually any kind of 3D game with stunning visuals (chpt 1.5.2)10.

Unlike Unity, however, its huge arsenal of tools does not seem to be forcing a company to grow in every direction all at once. Unreal’s identity is strikingly clear - its job is to produce the best-looking graphics possible. It is also mindful that plenty of AAA studio with large teams are using this engine and that team roles there are more diverse than in smaller studios, so the tools that are given to the artists vary in degree of complexity but are rather artist-friendly, and the workflow for meshes and materials is similar to that of native 3D software like Blender or Maya. Visual scripting in a form of Blueprints is an integral part of an engine’s world editor (unlike visual scripting solutions in Unity that exist only as external plugins). Built-in version control system allows including a new team member into a project and easily merge new changes into a scene that a team is currently working on. Arguably Epic Games’ most famous project Fortnite not only showcases some of the extents of its engine, but also serves as a platform for game development with Unreal Editor for Fortnite (UEFN). Games created with UEFN can be directly published to the Fortnite platform, reaching a built-in audience of millions.39

2.1.2.1. Graphics

Undoubtedly, the primary driver behind Unreal Engine’s widespread adoption is its ability to produce stunning visuals. Let’s delve deeper into these graphics-centric advancements. Three of the most talked about technologies are Lumen, Nanite and Virtual Shadow Maps.

Lumen is a global illumination and reflections system. It is fully dynamic which means there is no need for light baking. Its “primary shipping target is to support large, open worlds running at 60 frames per second (FPS) on next-generation consoles”.40 It does not work on PlayStation 4 and Xbox One. Lumen provides 2 methods for ray tracing: software ray tracing and hardware ray tracing. Software ray tracing is more limiting as there are restrictions on what kind of geometry and materials can be used e.g. no skinned meshes. Lumen Scene operates on the world around the camera, hence fast camera movement will cause Lumen Scene updating to fall behind where the camera is looking, causing indirect lighting to pop in as it catches up. This system works by parameterizing surfaces in a scene into Surface Cache which will be used to quickly look up lighting at ray hit points. Material properties for each mesh in a scene are captured from multiple angles and populate Surface Cache. Lumen calculates direct and indirect lighting for these surface positions, including sky lighting. For example, light bouncing diffusely off a surface picks up the color of that surface and reflects the colored light onto other nearby surfaces, also known as color bleed. Diffuse bounces are infinite, but Lumen Scene only covers 200 meters (m) from the camera position (when using software ray tracing). For the fullest potential of Lumen, it is recommended to use hardware-accelerated ray tracing, which raises a question how much of a Lumen’s success lies with its software innovation as opposed to just the utilization of new features provided by the latest hardware of graphics cards.

Nanite is a Level Of Detail (LOD) system. “A Nanite mesh is still essentially a triangle mesh at its core with a lot of level of detail and compression applied to its data”.41 Nanite can handle orders of magnitude more triangles and instances than is possible for traditionally rendered geometry. When mesh is first imported into a scene, it is analyzed and broken down into hierarchical clusters of triangle groups. This technique that might be similar to Binary Space Partitioning. “During rendering - clusters are swapped on the fly at varying levels of detail based on the camera view and connect perfectly without cracks to neighboring clusters within the same object”.41 Data is streamed in on demand so that only visible detail needs to reside in memory. Nanite runs in its own rendering pass that completely bypasses traditional draw calls. Overall, this addition to an engine allows building extremely complex open worlds with astonishing amount of details. Filming industry can abuse Nanite by importing high fidelity art sources like ZBrush sculpts and photogrammetry scans. Although it is good for complex meshes when doing cinematography, there are struggles with rendering a game at 60 FPS, in which case a manual optimization of LODs is preferred over relying on automatic LODs from Nanite.42

Virtual Shadow Maps (VSM) is a shadow mapping method used to deliver consistent, high-resolution shadowing. They need to exist in order to match highly detailed Nanite geometry. Their goal is to replace many stationary lights with a single, unified path. At the core they are regular shadow maps but with high resolution (16k x 16k pixels). However, in order to keep performance high at reasonable memory cost, VSMs are split into Pages (small tiles) that are 128x128 each. Pages are allocated and rendered only as needed to shade on-screen pixels based on an analysis of the depth buffer. The pages are cached between frames unless they are invalidated by moving objects or light, which further improves performance.43 Since VSMs rely on Nanite, they might suffer from similar performance issues.

There are many more fascinating tools and features in this engine like Datasmith (import entire pre-constructed scenes), Niagara (VFX system), Control Rig (character animations), Unreal Insights (profiling) and an elaborate online subsystem which aids with handling asynchronous communication with a variety of online services. Unfortunately, the review of those modules as well as popular plugins is a topic on its own and deserves a separate paper. We do take a brief, high level look at the architecture of its codebase, however, before exploring what drawbacks are present in this engine have.

2.1.2.2. Architecture

Unreal’s build system is called UnrealBuildTool. A game is a target built with it, and it comprises from C++ modules, that implement a certain area of functionality. Code in each module can use other modules by referencing them in their build rules, which are C# scripts. This system is not much different from CMake, autotools, meson etc. Modules are divided into 3 categories: Runtime, Editor functionality, and Developer tools. Gameplay related functionality is spread throughout Runtime modules, some of the most commonly used are:

- Core: “a common framework for Unreal modules to communicate; a standard set of types, a math library, a container library, and a lot of the hardware abstraction”.44

- CoreUObject: a base class for all managed objects that can be integrated with the editor. It’s the central object in the whole object-oriented model of an engine.

- Engine: functionality associated with a game (game world, actors, characters, physics, special effects, meshes etc.).

Modules enforce good code separation, and although it does not necessarily make Unreal modular in a traditional sense, developers are able to specify when specific modules need to be loaded and unloaded at runtime based on certain conditions. Include What You Use (IWYU) option further helps with compilation speeds - every file includes only what it needs, instead of including monolithic header files, such as Engine.h or UnrealEd.h and their corresponding source files. As for the gameplay programming, scripts are written in C++ like an engine itself, and they use inheritance to expand the functionality of new objects (Actors). There is a visual scripting language called Blueprints that allows to create classes, functions, and variables in the Unreal Editor. Another option for scripting is Python, however it is still in experimental stage. It is a good option when one needs to automate his workflows within the Unreal Editor.

2.1.2.3. Cons

As for the drawbacks of this engine, perhaps the biggest one would be the consequence of trying to optimize toward the next generation graphics targets. All those innovative systems listed earlier cannot be fully taken advantage of if a game is being run on moderately powerful graphics cards prior to 20 series.45 Out of all game engines we discuss in this paper, Unreal editor is the most resource hungry and causes frequent stuttering. These performance issues make it hard to recommend this engine for developers with a limited budget and who want to target a variety of dated platforms.

Another noticeable issue arises when working with C++. Oftentimes writing scripts feels like writing a new dialect of C++ where one needs to learn specific macros and naming conventions. A lot of Unreal’s code has poor encapsulation. Many member variables are public in order to, presumable, support the editor and blueprint functionality. Some public member functions should never be called by users, like the ones that manage the “lifetimes” of objects, but they are called by other modules of an engine. This clutters the interface of many objects, making it difficult to figure out what are the important interface features of unfamiliar classes. It is also difficult to make use of RAII due to how Unreal Engine handles the creation of UObject-derived objects. Managing the state of the objects via initialization/uninitialization functions requires more caution compared to traditional C++, and it is not uncommon for complex objects to have certain parts with a valid state while the rest is not.

Other cons are less problematic and are rather subjective. For example people on forums claim that the documentation is lacking. What they actually imply is that either API reference is outdated (which is often the case for massive projects with frequent release cycles), or that a manual does not explain certain game development concepts in enough details, which is hardly an engine’s fault. A steep learning curve is not a problem but a price that game developers need to pay to work on next generation games. Tim Sweeney, a founder of Unreal Engine says

You can’t treat ease-of-use as a stand-alone concept. It’s no good if the tools are super easy to get started with, so you can start building a game, but they’re super hard to finish the game with, because they impose workflow burdens or limited functionality.46

Workflow for 2D games is not great, but from the very start of this engine, it was focused on developing shooters or first-person games.30 2D is simply not its specialization to begin with. A need to use C# for build scripts is a peculiar decision, but there is no universal way to build C++ applications and probably never will be, this is a problem of the infrastructure around the language of choice and not of the engine. Lastly a marketplace is big, and it is integrated very well into the engine and Epic store, but it certainly could be improved. There are not enough filters to search for assets, and many of them are either unfinished, broken or have licensing issues which makes them impossible to use in commercial products.

2.1.3. Takeaways

Unity and Unreal stand as titans in the game development landscape. Their long histories as free game engines make it a challenge for newcomers to dethrone them from the top. Both implement a royalty system for commercially successful games (Unreal at 5% above $1 million USD in sales, and Unity with variable plans). Source code of both engines is proprietary, but can be accessed nonetheless: Unreal on GitHub and Unity by paying for Enterprise or Industry editions. Games made with Unreal seem to scale up better than Unity, as Epic appears to have built a more robust infrastructure around the whole process of game development instead of simply giving developers the tools to make vide games. Meanwhile, Unity struggles with handling AAA games with large landscapes and heavy on-screen elements.33 Yet it appears to be a better choice for newcomers, as the skill floor is lower and the amount of learning materials (mostly community generated) is higher. It is also an engine of choice for serious games, as a final game can be easily exported to more platforms (including web browsers) with a price of using more computational resources, and the diversity of possible game genres and themes is superior to that of Unreal.

Neither engine, however, provides any meaningful tools to solve the problems of the pre-production phase of game development that were described in 2. Requirements specifications for emotions, gameplay, aesthetics and immersion exist outside the engine, which makes developers not responsible for adhering to those requirements. This opens an opportunity for incorporating high level game system description languages (external schema) into the engine, which in its turn requires Game Design Documents to be an integral part of an engine.

Importantly to the objective of this paper, we distinguished 4 key elements responsible for the popularity of a game engine:

- Portability. Developers want to work on games instead of figuring out how to port them to certain platforms. The more target platforms a game engine supports - the more likely it is to be used. NOTE

To develop for consoles, one must be licensed as a company. Console SDKs are secret and covered by non-disclosure agreements. Therefore, no game engine under an open source license is allowed to legally distribute console export templates.

- Versatility. Nobody knows how a final game will look like from the start and what systems will it need. Having every possible tool available is a safety precaution that permits to do drastic changes that can impact a game’s future vector of development

- Graphics. Even when visual fidelity is not the primary goal, everybody wants their games to look beautiful.

- Community. Games are not created in isolation from scratch. A good engine needs to have a straightforward way of integrating community generated content, be it code snippets or assets. For these purposes, it is crucial to have a first-class support for developing external plugins and assets via a marketplace. Knowledge needs to be passed and re-applied, and a game engine can serve as a playground to apply this knowledge and to battle test new ideas and techniques.

Now with those four criteria in mind, we compare some of the popular open source engines as well as try to find what other points need to be considered when designing a game engine.

2.2. Engine Analysis

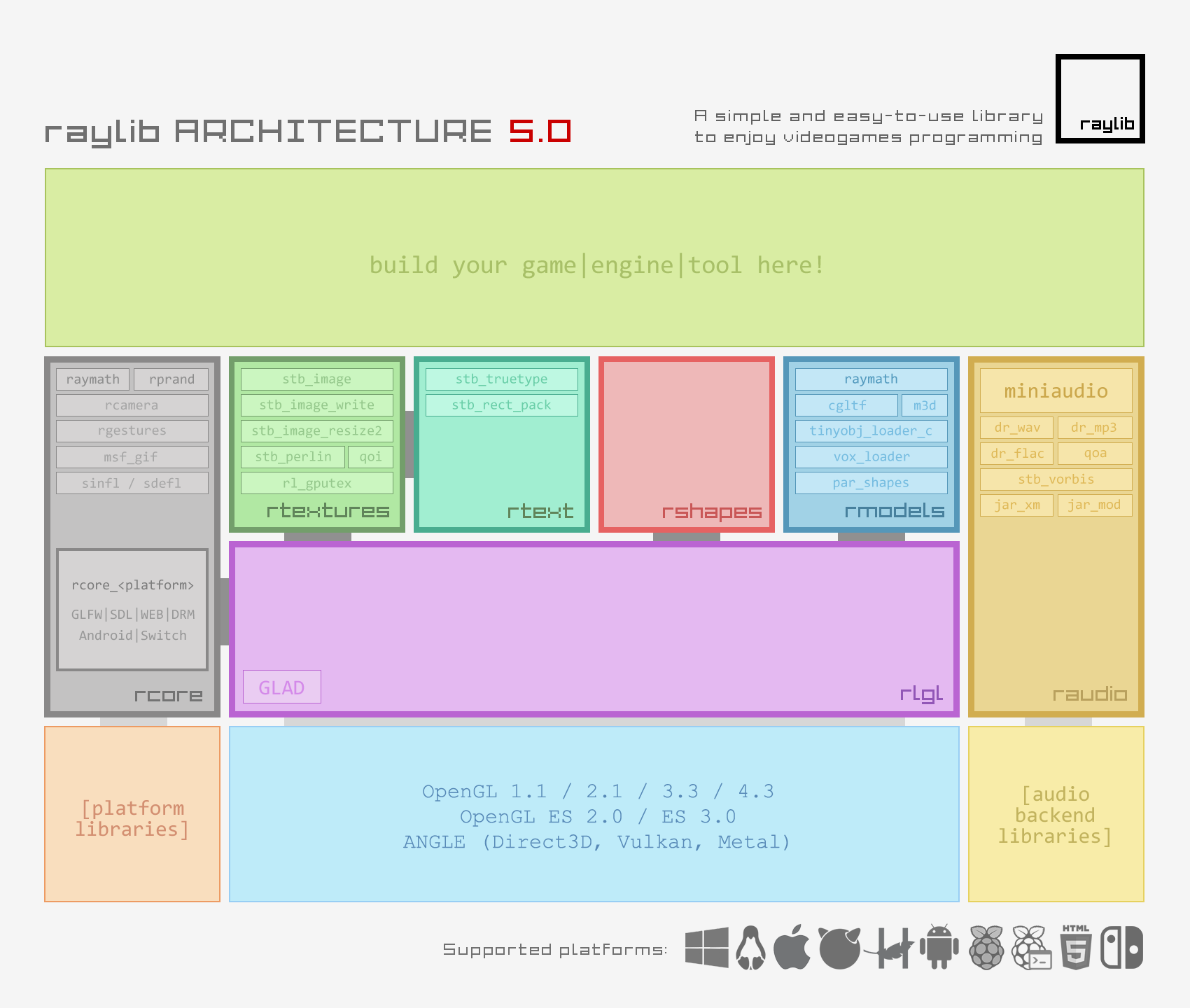

This section begins by examining the research questions posed by Anderson and friends6 which will serve as a starting point for formulating the requirements of a next generation game engine. We then analyze the Runtime Engine Architecture (RTEA) introduced by Gregory and define the responsibilities of each module. Then we take a look at common game world models that describe how game objects are to be managed inside the engine. This is followed by a comparative analysis of several open source engines and frameworks: Raylib, SDL2, Bevy and Godot; contrasting their architecture with the RTEA model. Finally, we expand on the potential responsibilities of a general purpose engine and whether game modding should be treated as the extent of game development.

Questions, or rather topics of concern, raised by Anderson et al. are as follows:

- The lack of standardized “game development” terminology. How to properly define any given engine and each of its components as well as other aspects relating to game development?

- What is a Game Engine? Where’s a line between a complete game and an engine that was used building it?

- How do different genres affect the design of a game engine? Is it possible to define a game engine independently of genre?

- How do low-level issues affect top-level design? Are there any engine design methods that could be employed to minimize the impact of the future introduction of new advancements in computer game technology?

- Best Practices: Are there specific design methods or architectural models that are used, or should be used, for the creation of a game engine?

Since we will be revisiting those questions frequently throughout the entire paper, and we do not want to confuse them with the research questions of this paper, we need to come up with a convenient acronym for them. To do the justice to all authors, we will combine 2 letters of their family names into one word: AN-EN-LO-CO; consequently the questions will be ANENLOCO-[1-5]. ANENLOCO-5 requires a separate quantitative research and is outside the scope of this paper. ANENLOCO-2 was partly answered in Section 1.2 - game engine refers to the whole software development ecosystem which helps with producing video games. Whether such treatment is optimal or not is certainly up to debate, but in order to make the most diligent research, we need to take into account not only the runtime components of the engine, but also third-party tools used for creating game assets. By taking them into account, we gain a better ability to trace the roots of any particular problem - whether a tool creates it and leaves it to the engine to solve, or merely adapts to the need of an engine which created a problem in the first place. To answer ANENLOCO-4 and not viewing the whole picture of the entire game’s development stack is, at the very least, nearsighted.

2.2.1. Runtime Engine Architecture

First distinction Gregory makes is separating a tool suite from runtime components (chpt 1.6)10. Tools include the software used for asset creation like Blender and Maya for 3D meshes and animations, Krita and Photoshop for textures, Ardour and Reaper for audio clips, etc. Those digital content creation (DCC) tools are separated from the engine and do not need to know anything about each other. Tool suite also includes a version control system and instruments around a programming language (compiler, linker, IDE). With other elements this distinction becomes less apparent - what should be a part of the RTEA, and what should belong to a tool suite?

For example, performance analysis tools (profilers) vary in degree of complexity: some of them are standalone programs that only require a compiled executable, such as Valgrind, while others are designed to be injected into code to profile certain parts of a program, like Tracy. Similarly, debugging can be done either with an executable using GDB, or a primitive manual console-logging/line-drawing for a quick and dirty visual feedback. Even operating system submodule is ambiguous and cannot be easily classified into neither a tool suite nor RTEA, as it provides a slew of drivers, system packages and a libc, while simultaneously being the foremost important tool for any artist or developer and the core dependency of every DCC.

Another element of a tool suite is the asset conditioning pipeline. It is the collection of operations performed on asset files to make them suitable for use in the engine by either converting them into a standardized format, a custom format supported by the engine or a binary. To put it simply, it is a file compression/decompression system. The issue with this module is that a lot of DCC software allows integrating custom plugins that will take care of exporting an appropriate format from them, eliminating the asset conditioning pipeline altogether, making this module entirely arbitrary if it doesn’t describe the contract between DCC and the engine.

A similar peculiarity also strikes Resource Management (RES) module. It provides an interface for accessing different kinds of game assets, configuration files and other input data, making it a logical cog in the machinery of the engine. However, to scale a game up painlessly, an engine needs a database in order to manage all metadata attached to the assets. This database might take a shape of either an external relational database like NoSQL - thus making RES depend on the unaccounted for DCC software; or a manual management of text files, which than will be handled exclusively in the engine’s RES module.

Lastly, the icing on the cake, a cherry on top is the World Editor (EDI) - a place “where everything in a game engine comes together” (chpt 1.7.3)10. “Scene creation is a large part of game development and in many cases visual editors beat code”.47 Consequently, to have a visual world editor an engine needs to be logically assembled, which makes this submodule to reside at the top of the dependency hierarchy. It can be architected either as an independent piece of software, built on top of lower layer modules, or even be integrated right into a game. A world editor allows to visually create and manage both static and dynamic objects, navigate in a game world, display selections, layers, attributes, grids etc. Some examples of world editors integrated into games are shown bellow. Weirdly, Gregory classifies a World Editor as a part of the tool suite.

Runtime components are what makes up the rest of the typical engine, and normally they are structured in layers with a clear dependency hierarchy, where an upper layer depends on a lower layer. Similarly to a tool suite, it is not always obvious which category a certain submodule should belong to. Third-party SDKs and middleware (SDK) module lay at the lowest layer of RTEA right above an operating system (OS). SDK is the collection of external libraries or APIs that an engine depends on to implement its other modules. This might include container data structures and algorithms; abstraction over graphics APIs; collision and physics system; animation handling etc. Platform Independent Layer (PLA) acts as a shield for the rest of the engine by wrapping platform specific functions into more general, platform-agnostic ones. By doing so, the rest of the engine gets to use a consistent API across multiple targeted hardware platforms, as well as having the ability to switch certain parts of SDK with different ones. Core Systems’ (COR) exact definition is vague: “useful software utilities”, but some of its responsibilities include: assertions (error checking), memory management (malloc and free), math, common data structures and algorithms, random number generation, unit testing, document parsing, etc. Such breadth of facilities inadvertently raises a question whether a standard library provided by a language can be considered a Core engine system. We discuss this possibility in Section 3.4. These three components (SDK, PLA, COR) as well as RES and potentially OS form the base for any game engine, even in simple cases when the entire functionality can reside in a single file.

Another essential collection of modules is grouped into a rendering engine. Rendering is the most complex part of an engine and, similarly to base components, most often architected in dependency layers. Low-Level Renderer (LLR) “encompasses all of the raw rendering facilities of the engine… and draws all of the geometry submitted to it” (chpt 1.6.8.1)10. This module can be viewed as Core but for a rendering engine. Scene Graph/Culling Optimizations (SGC) is a higher-level component that “limits the number of primitives submitted for rendering, based on some form of visibility determination”. Visual Effects (VFX) includes particle systems, light mapping, dynamic shadows, post effects etc. Front End (FES) handles UI, aka displaying 2D graphics over 3D scene. This includes heads-up displays, in-game graphical user interface and other menus.

The rest of RTEA consists of smaller, more independent components: Profiling and Debugging tools (DEB), Physics and Collisions (PHY), Animation (SKA), Input handling system (HID), Audio (AUD), Online multiplayer (OMP) and Gameplay Foundation system (GMP) which provides game developers with tools (often using a scripting language) to implement player mechanics, define how game objects interact with each other, how to handle events, etc. Taking a look back at Unity, GMP’s subsystems in there are by far the most scarce out of all modules - the burden of defining and implementing them is entrusted onto game developers. Such approach allows Unity to be virtually genre-agnostic, and as such gives us a strong hint to answer ANENLOCO-3 - defining an engine independent of a game’s genre.

The last component is called Game-Specific Subsystems (GSS). Those are the concrete implementations of particular gameplay mechanics that are individual to every game, and highly genre-dependent. That’s a component the responsibility for which gradually shifts from programmers towards game designers. “If a clear line could be drawn between the engine and the game, it would lie between the game-specific subsystems and the gameplay foundations layer” (chpt 1.6.16)10. Therefore, this piece lies outside RTEA, as those subsystems are genre-dependent and closely coupled with a final game, giving it its unique characteristics. Gregory’s statement gives us a hunch to the answer for ANENLOCO-2, however he then adds that on practice this line is never perfectly distinct, which further enforces our point that an engine is just a notional term.

Regarding this, an interesting point is made by Fraser Brown, a developer of a strategy game “Hearts of Iron” by mentioning that an engine of their game is actually split into two parts. One is called Clausewitz, which is “a bunch of code that you can use to make games. You could use it to make a city-builder, a strategy game, an FPS… not that it would give you any tools for that, but you could…”48 And another is Jomini, which is “specifically for the top-down, map-based games”. Yet despite there being two of them, “the pair are two halves of the same engine”. This separation allows them to share the core technology across their other projects and make a development cycle much faster. It is safe to assume that Jomini is where a game’s genre is being determined, and it seems that it accomplishes the same tasks described in GMP and GSS modules of RTEA.

Despite the fact that Gregory lists in great details possible submodules for each runtime component, the biggest issue of his architecture arises with the inclusion of multiple SDKs which act not as independent utilities but rather as mini frameworks encompassing the functionality of multiple components. In other words, unless an engine is written entirely from scratch, the addition of external libraries risks in an engine to have multiple places where one particular logic is being implemented, which causes the ambiguity of concerns and really hurts its modularization. Thus, tight coupling between subsystems becomes inevitable as a project grows in size, but there is nothing supernatural about it, as was manifested by Ullmann and the crew.12 The problem is not with the coupling per se, but rather with the lack of a universal description of this engine subsystems coupling - an issue described by ANENLOCO-1. When a single external library implements the functionality of multiple submodules, it is up to an individual engine developer to decide how to design the architecture with multiple external libraries that require each other’s functionality. Unfortunately, “often authors present their own architecture as a de facto solution to their specific problem set”.6 This dilemma is what causes game engines to still be a gray area in academic circles, as was mentioned in the beginning.

As an example, let’s take a look at bgfx - a cross-platform rendering library written in C++. Trying to fit it inside RTEA proves to be a cognitive challenge as there are 4 potential runtime components that are mainly or partially responsible for providing the functionality supplied by bgfx. First is obviously SDK - an interface that allows an engine to talk to the hardware; 2) PLA, as it provides a unified API across different platforms; 3) LLR - draw whatever geometry is submitted to it (geometry primitives are not included); 4) SGC - occlusion and LOD for conditional rendering. Thus, if an engine is to be built on top of bgfx, its architecture would differ from RTEA because its rendering engine would be split in two: two out of four components (LLR, SGC) are provided by an external package, and other two (VFX, FES) will have to be either implemented in the engine, or be imported from another package (it is extremely unlikely that a library will provide VFX and FES functionality independently of a rendering engine). Apart from bgfx, there are countless game-related frameworks varying in caliber that are meant to be used as either a part of another engine or a self-sufficient tool to make games.

While Gregory’s RTEA is not the only framework for game engine design, as was pointed out by 12, its comprehensive delineation of components makes it an extremely valuable model for understanding the complex workings of modern game engines. An alternative to splitting game development tools into engine components (RTEA) and tool suite (DCC) is proposed by Toftedahl and Engström in their “Taxonomy of game engines”. Engine is split into 3 groups: 1) Product-facing tools which is essentially most of the engine’s functionality; 2) User-facing tools allow designers and game developers to create game content (GMP); 3) Tool-facing tools are the plugins that bridge different components of an engine with DCC.15

Having explored the basis of RTEA, we can turn our attention to the architecture of a game world - the design and communication patterns of objects within the game itself. While engine architecture focuses on the underlying systems that power the game, game world model deals with the structure of the game’s content and logic. This distinction is crucial in answering ANENLOCO-2, “Where is the line between a complete game and the engine used to build it?”.6

2.2.2. Game World Model

First, we need to understand the principles of a software architecture, and why is it important to have one in a project. Every program has some organization, even if it’s just “jam the whole thing into main() and see what happens”.26 Writing code in such way and without a second thought will get increasingly harder the further it gets. A software architecture is a set of rules and practices for the code based on the project’s goals. If there’s two equally good ways to do something, a good architecture will pick one as the recommended approach. Each feature or component should be implemented similarly to the other features and components.49 For a game development example, if a design requires a developer to spawn an enemy at a random location, a good architecture should specify which random number generation algorithm to use.

Joanna May, a Godot game developer, suggests that “a good architecture should take the guesswork out of what should be mundane procedures and turn it into something you can do in your sleep”49 and lists following points that a good architecture should describe:

- Organization: where to put code and assets;

- Development: what code to write to accomplish a certain feature;

- Testing: how to write tests;

- Structure: how to get and use dependencies;

- Consistency: how to format code;

- Flexibility: what happens when there’s a need to refactor something.

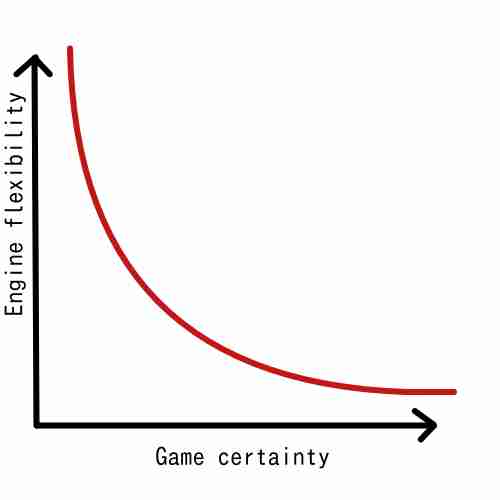

Robert Nystrom, an author of “Game programming patterns” book, additionally stresses that “Architecture is about change. The measure of a design is how easily it accommodates changes”. The more flexible a game is, the less effort and time it takes to change it, which is paramount during game development’s production phase, as good design requires iteration and experimentation. However, gaining high level of flexibility means “encoding fewer assumptions in the program… but performance is all about assumptions. The practice of optimization thrives on concrete limitations”. For example, knowing that all enemies in a level are of the same type, it becomes possible to store them in a single static array, which makes iterating on them faster, as opposed to storing pointers to different enemy classes and dereferencing them every loop iteration. This quandary is reminiscent of Heisenberg’s uncertainty principle: the more you focus on optimization, the more difficult it becomes to introduce changes into the game, while the more flexible it is, the fewer places for optimization there are. Bob brilliantly concludes that “There is no right answer, just different flavors of wrong”, yet in his opinion it is “easier to make a fun game fast than it is to make a fast game fun”.26 This is partly what Ms. May implies by a second key goal of a good architecture: what kind of code to write to hit the optimal middle ground between flexibility and performance. If we take a further look back, we see that exact same question is raised in ANENLOCO-5.

Another goal of software architecture is to minimize the amount of knowledge a developer is required to have before he can make progress.26 This is why software introduces abstraction layers - to hide the complexity of underlying lower level systems behind a couple of function calls. Abstraction also helps with removing duplication, which many software engineers are taught to avoid in pursuit of a clean code, but this is a double-edged sword. When adding an abstraction layer, you are adding extra complexity and speculating that it will be needed in the future either by yourself or other developers. When it is - congratulations, you have become the next Dennis Ritchie; but when it only covers some of the cases, and there is a need to pass extra parameters to cover edge cases - the opposite of the original goal was achieved. Now developers need to understand more code to add new changes. “When people get overzealous about this, you get a codebase whose architecture has spiraled out of control. You’ve got interfaces and abstractions everywhere. Plug-in systems, abstract base classes, virtual methods galore, and all sorts of extension points”.26 Regarding this problem, Sandi Metz remarks that “Duplication is far cheaper than the wrong abstraction”.50 Although in theory it is simple to follow, in reality there is almost never an obvious answer when the introduction of new abstraction is safe, let alone mandatory. Since one of the aims of this paper is to discover optimal organization of game engine components and how they can be reused, I believe that at the lowest level there should be as little abstraction as possible, because abstraction implies generalization of a problem which in turn prevents applying the optimal solution to niche problems at critical sections.

Having understood the purpose of a good architecture, let’s see how it works in practice. Once an aspiring game developer has planned out the entire plot and gameplay for his magnum opus game of the year, and has picked the according tools to build such a game, it is time to start populating a game world with static (meshes of buildings, vegetation, terrain, etc.) and dynamic (enemies, main character, etc.) objects. Those objects are often called by a mysterious name “game object” (GO) or in some cases “entity”. Anything that can be placed into a game world is a game object. A game world consists of one or more “game scenes” (levels), which are loaded and unloaded on demand. But how does one describe a game scene - what are the relations between GOs, how to update them and in which order, how to store them in memory, where should the logic of operating on those objects live? This is the job for Game World model - the specification of how to organize base building blocks for every gameplay-related aspect. From the information I could gather, there are 4 common models, which take roots from regular software development paradigms.

2.2.2.1. Procedural Model

The simplest way to organize GOs is to do it in good ol’ days without abstraction - break them down into small data structures and functions (procedures) that perform logic on them. Original Doom was written this way, as well as many other games in 80s and 90s when procedural way of programming was widespread. Early graphics APIs were also based on this approach. Unlike two other models that we take a look at next, both of which can be described by set of restrictions, procedural model, in essence, is the absence of restrictions - anything goes. It is the most straightforward and least interesting approach towards GOs organization, but it is considered to be efficient both in terms of performance and memory usage51 - key concerns for graphics rendering. The biggest downside of such model is how difficult it is to scale a world because every action of a GO requires a separate procedure that cannot be easily shared by other GOs. Hence, for modern game development in AAA studios this strategy is but a relic of the past, although for simpler games with small teams such strategy is perfectly viable.

2.2.2.2. Object-Component Model (OCM)

Robert Anguelov, a game engine developer, explains this model and gives it this name in his video presentation.24 This model is based on classic Object-Oriented programming in software engineering and has evolved over years and became the de facto model in modern game development, however it is not standardized and there are numerous different flavors. The basic principle is that a GO owns components. For instance, Player has Movement, Camera, Weapon and whatnot. (many engines have different names for a parent object: Actor, Pawn, Entity, etc.) GOs have their own init and update logic, while components may or may not have it (depends on an engine). GO can reference other GOs and components. Component stores data (references, settings, runtime state) and logic. It can reference other components and GOs. GOs and components can form hierarchies to avoid unnecessary duplication - derive different enemies from a base enemy pawn. Spatial hierarchies, in particular, are not only possible but are fundamental to describe the location of GOs in a scene. In Unreal Engine a Scene object (root) has a transform component and every GO’s position in a world is relative to that transform. Other engines might implement different spatial data structures such as scene graphs, spatial partitioning, and bounding volume hierarchies.13

OCM offers several significant advantages in game world architecture. Primarily, it allows for comprehensive representation of scene elements - GO can describe an element of the scene in its entirety (e.g. a character comprising a capsule, 5 skeletal meshes, 1 static mesh and all the logic). This conceptual simplicity facilitates intuitive reasoning about what exactly should belong to which object, which vastly simplifies the job for designers. OCM’s friendly scene-tree approach allows for easy visualization and navigation in complex game worlds as well as creating tooling (world editor integrations) to manage it. Such structure is modding friendly as it allows creating new objects by simply tinkering their components via scripting.52 Although not common, but OCM naturally goes well along with a feature-based file structure where any files that get shared between GOs live in the nearest shared directory instead of being split into ‘scripts’, ‘textures’, ‘scenes’ and likes. For instance, everything a “coin” needs lives in a coin directory - code, textures, audio, etc.49

Nevertheless, there are several disadvantages to be aware of, which are mostly derived from the typical problems faced in regular OOP like deep hierarchies or diamond inheritance. Firstly there are dangers of “Reference/Dependency hell” - functions like GetOwner(), GetObject(), GetComponent() can return references from anywhere which can cause cyclic dependencies and deadlocks. To solve this matter, one needs to be explicit with the order of initialization (and consequentially the update order with something like update priority) and data transfer, which is quite an undertaking when the amount of unique objects exceeds few hundreds or even thousands. Another way to solve this is demonstrated in Unreal Engine by unifying components - USkeletalMeshComponent is a combination of animation, deformation, physics, cloth and some other components. This approach of data storage is not cache friendly because data is not stored near each other in memory, however due to the power of modern CPUs, this shortcoming is not manifested in average-sized games, and only becomes apparent in large projects. Most codebases with OCM end up a web of inter-component/object/singleton dependencies, which is nearly impossible to untangle partly because there are hundreds of ways to decompose the GO system problem into classes, and partly because of the time constraints faced in game development, which often result in “prototypes” being the final solutions. This in turn limits the reusability of components, which was one of the primary goals of this approach.24

OCM fosters many design patterns applicable to regular OOP such as observer (subscribe to events of an object) and state (alter object’s behavior based on its state) patterns, as demonstrates Joanna May within Godot engine.

State machines, their big brothers state charts and behavior trees are integral part of almost any game and are not exclusive to this model.

She separates responsibilities into 3 levels: the lowest is called “Data layer” which is essentially most of the engine’s modules described by RTEA except GMP; higher in hierarchy is a “GameLogic layer” which is responsible for manipulating the game and its mechanics (GMP module of RTEA + Game-Specific subsystems (GSS)); and at the highest level is “Visual layer” - pure visual representation of GOs in a scene without its own logic, governed by a state machine. GameLogic layer is additionally split into two parts:

- Visual GameLogic - “code that drives their visual components by calling methods on them or producing outputs that the visual game component binds to”. In other words state machines that are specific to certain visual components. “An ideal visual component [from Visual layer] will just forward all inputs to its underlying state machine [Visual GameLogic layer]”.

- Pure GameLogic - “game logic that’s not specific to any single visual component. Implements the rules that compromise a game’s “domain” [genre]. A good state machine, behavior tree, or other state implementation [Visual GameLogic] should be able to subscribe to events occurring in repositories [Pure GameLogic], as well as receive events and/or query data from the visual component [Visual layer] that they belong to“.49

Suggested architecture perfectly complements Gregory’s RTEA (which is also object-oriented at the core) and in particular explains how to organize Game-Specific Subsystems which are not part of the game engine itself. It’s worth mentioning that Gregory differentiates two distinct but closely interrelated object models:

- Tool-side object model - GOs that designers see in the editor;

- Runtime object model - language constructs and software systems that actually implement tool-side object model at runtime. It might be identical to the tool-side model, or be completely different (chpt 15.2.2)10.

Somewhat similarly, Scott Bilas a developer of Dungeon Siege, distinguishes Static GO hierarchy - “templates that determine how to construct objects from components”, and Dynamic GO hierarchy - “GOs as described by a template that are created at runtime, die and go away”.53 Hierarchy of static objects, as well as their base properties can be described by external schema. The reason for this is that “designers make decisions independently of engineering type structures and will ask for things that cut across engineering concerns”.

2.2.2.3. Entity-Component-System (ECS)

This rather radical architecture gained popularity very recently treats GOs (entities) as a database and game logic is performed by querying specific components.52,53 The purpose of this model is to decouple logic from data to allow a game to be highly modular and flexible. An entity is simply an ID, meaning it doesn’t exist as an actual object, and components are pure data containers. Logic is moved into systems and systems match on entities with specified components. All update logic resides in them which makes them highly parallelizable. Just like in OCM, there is no one standard way to define an ECS model and there are many different flavors of it.24